NVIDIA Grove Streamlines Complex AI Inference on Kubernetes

Published on November 10, 2025 at 12:00 AM

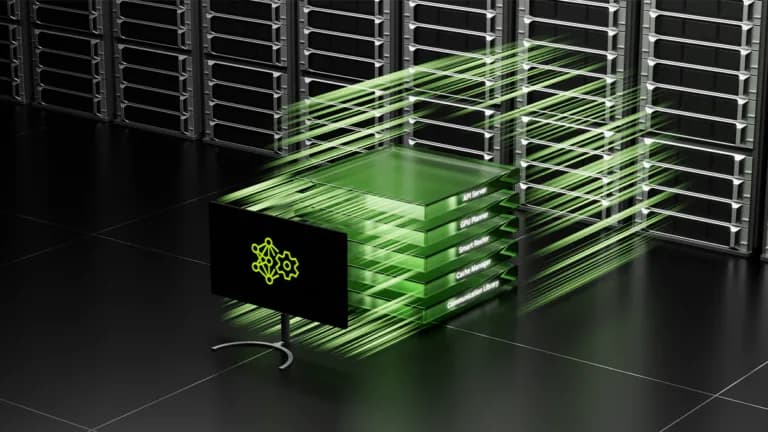

On November 10, 2025, NVIDIA announced that NVIDIA Grove is available as a modular component of NVIDIA Dynamo. Grove is a Kubernetes API designed to streamline how modern machine learning (ML) inference workloads run on Kubernetes clusters.

Grove addresses the challenge of managing complex, multicomponent AI inference systems: it lets users scale multinode inference deployments up to tens of thousands of GPUs. Grove is fully open source and available in the ai-dynamo/grove GitHub repository.

Key features of NVIDIA Grove include:

- Multilevel Autoscaling: Scales individual components, related component groups, and entire service replicas.

- System-Level Lifecycle Management: Manages recovery and updates for complete service instances rather than for individual pods.

- Flexible Hierarchical Gang Scheduling: Supports policies that enforce minimum viable component combinations while enabling flexible scaling.

- Topology-Aware Scheduling: Optimizes component placement based on network topology.

- Role-Aware Orchestration: Enforces role-specific configuration and startup dependencies for reliable initialization.

Grove expresses these capabilities through three hierarchical custom resources:

- PodCliques: Groups of Kubernetes pods that share a specific role and have independent configuration.

- PodCliqueScalingGroups: Bundle tightly coupled PodCliques that must scale together.

- PodCliqueSets: Define the entire multicomponent workload, including startup ordering and scaling policies.
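To make the resource hierarchy and multilevel autoscaling above more concrete, here is a minimal Python sketch that only counts pods. The class names echo Grove's resources, but the fields (`role`, `replicas`, `standalone`, `groups`), the `pods()` method, and the prefill/decode/frontend layout are hypothetical simplifications, not Grove's actual API or CRD schema.

```python
from dataclasses import dataclass, field

# Hypothetical, simplified stand-ins for Grove's resources. Field names are
# illustrative only and do not mirror Grove's real CRD schema.


@dataclass
class PodClique:
    role: str
    replicas: int  # number of pods with this role


@dataclass
class PodCliqueScalingGroup:
    name: str
    cliques: list[PodClique]
    replicas: int = 1  # copies of the whole bundle; member cliques scale together

    def pods(self) -> int:
        return self.replicas * sum(c.replicas for c in self.cliques)


@dataclass
class PodCliqueSet:
    name: str
    standalone: list[PodClique] = field(default_factory=list)  # cliques not in a group
    groups: list[PodCliqueScalingGroup] = field(default_factory=list)
    replicas: int = 1  # copies of the entire service

    def pods(self) -> int:
        per_replica = sum(c.replicas for c in self.standalone)
        per_replica += sum(g.pods() for g in self.groups)
        return self.replicas * per_replica


# Hypothetical disaggregated-serving layout.
frontend = PodClique("frontend", 2)
prefill = PodCliqueScalingGroup(
    "prefill", [PodClique("prefill-leader", 1), PodClique("prefill-worker", 3)]
)
decode = PodCliqueScalingGroup(
    "decode", [PodClique("decode-leader", 1), PodClique("decode-worker", 3)]
)
svc = PodCliqueSet("llm", standalone=[frontend], groups=[prefill, decode])
print(svc.pods())  # 10: 2 frontend + 4 prefill + 4 decode

frontend.replicas = 4  # component level
print(svc.pods())      # 12
prefill.replicas = 2   # scaling-group level: leader and workers scale together
print(svc.pods())      # 16
svc.replicas = 2       # service level: a full second copy of everything
print(svc.pods())      # 32
```

Each assignment corresponds to one scaling level: a single component, a bundle of coupled components, and the whole service.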
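The flexible hierarchical gang scheduling described above can be illustrated with a two-phase admission check. This is a hedged sketch, not Grove's scheduler: `CliqueRequest`, `min_available`, `gang_schedule`, and the GPU-count-only resource model are assumptions made for illustration. Phase 1 starts the minimum viable combination only if every role's minimum fits at once; phase 2 adds optional replicas with whatever capacity remains.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CliqueRequest:
    role: str
    min_available: int  # pods that must start together (hypothetical field)
    replicas: int       # desired pods, at least min_available
    gpus_per_pod: int


def gang_schedule(requests: list[CliqueRequest], free_gpus: int) -> Optional[dict[str, int]]:
    """Return how many pods to start per role, or None if the gang cannot fit."""
    # Phase 1 (all-or-nothing): every role's minimum must fit at the same time.
    base = sum(r.min_available * r.gpus_per_pod for r in requests)
    if base > free_gpus:
        return None  # start nothing rather than a partial, unusable service
    placed = {r.role: r.min_available for r in requests}
    free_gpus -= base

    # Phase 2 (flexible scaling): add optional pods round-robin while capacity lasts.
    progress = True
    while progress:
        progress = False
        for r in requests:
            if placed[r.role] < r.replicas and r.gpus_per_pod <= free_gpus:
                placed[r.role] += 1
                free_gpus -= r.gpus_per_pod
                progress = True
    return placed


requests = [
    CliqueRequest("prefill", min_available=1, replicas=3, gpus_per_pod=4),
    CliqueRequest("decode", min_available=1, replicas=3, gpus_per_pod=4),
    CliqueRequest("frontend", min_available=1, replicas=2, gpus_per_pod=0),
]
print(gang_schedule(requests, free_gpus=6))   # None: the minimum gang needs 8 GPUs
print(gang_schedule(requests, free_gpus=16))  # {'prefill': 2, 'decode': 2, 'frontend': 2}
```

Returning `None` instead of a partial placement is the central design choice: a prefill tier with no decode pods cannot serve requests, so starting it would only tie up GPUs.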
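For topology-aware scheduling, one simple heuristic is to keep a tightly coupled group inside a single topology domain (for example, a rack) so its inter-pod traffic stays local. The sketch below uses best-fit selection; the function name, the domain dictionary, and the choice of best fit are assumptions for illustration and are not taken from Grove.

```python
from typing import Optional


def place_in_one_domain(free_by_domain: dict[str, int], gpus_needed: int) -> Optional[str]:
    """Best-fit placement of a whole group into a single topology domain.

    Picks the domain with the least free capacity that still fits the group,
    reserves the GPUs there, and returns its name; returns None if no single
    domain can hold the group.
    """
    candidates = [(free, name) for name, free in free_by_domain.items() if free >= gpus_needed]
    if not candidates:
        return None
    _, name = min(candidates)  # tightest fit; ties broken by domain name
    free_by_domain[name] -= gpus_needed
    return name


# Hypothetical free-GPU counts per rack.
racks = {"rack-a": 16, "rack-b": 8, "rack-c": 4}
print(place_in_one_domain(racks, 8))   # rack-b (exact fit)
print(place_in_one_domain(racks, 8))   # rack-a (rack-b is now full)
print(place_in_one_domain(racks, 12))  # None: no rack has 12 free GPUs left
print(racks)                           # {'rack-a': 8, 'rack-b': 0, 'rack-c': 4}
```

Best fit leaves the largest domains free for later, larger groups; a production scheduler would likely also consider several topology levels (host, rack, zone) and fall back to spreading when no single domain fits.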
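Role-aware startup dependencies, and the startup ordering a PodCliqueSet specifies, can be modeled as a dependency graph released in waves. This sketch uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+); the role names, the `startup_deps` map, and the `startup_waves` helper are hypothetical examples, not Grove configuration.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical startup dependencies: each role maps to the roles that must be
# ready before it starts.
startup_deps = {
    "prefill-leader": set(),
    "prefill-worker": {"prefill-leader"},
    "decode-leader": set(),
    "decode-worker": {"decode-leader"},
    "frontend": {"prefill-worker", "decode-worker"},
}


def startup_waves(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group roles into waves; every role in a wave can start in parallel."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # roles whose dependencies are all done
        waves.append(ready)
        # Roles are marked done immediately here; an orchestrator would
        # presumably wait until their pods report ready.
        sorter.done(*ready)
    return waves


for i, wave in enumerate(startup_waves(startup_deps), start=1):
    print(i, wave)
# 1 ['decode-leader', 'prefill-leader']
# 2 ['decode-worker', 'prefill-worker']
# 3 ['frontend']
```

Roles in the same wave do not depend on each other and can start in parallel, while a misdeclared circular dependency is rejected up front by `prepare()` instead of deadlocking at startup.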